Researchers who leak Android’s hidden features have discovered a setting deep within the Android root files that enables Google Gemini directly from Google Search, in a manner similar to the Google app on Apple iOS. The discovery raises the question of why the setting is there and whether it is connected to a general rollout of AI in search, which is rumored to happen in May 2024.

Gemini: What SEO could be up against

There are only rumors that some form of AI search will be rolled out. But here’s what the search community could expect if Google rolls out Gemini access as a standard feature.

Gemini is Google’s most powerful AI model, with training, technology, and features that Google says surpass existing models in many ways.

For example, Gemini is the first AI model to be natively trained to be multimodal. Multimodal means the ability to work with images, text, video, and audio and to derive knowledge from each of these forms of media. Previous AI models were made multimodal by training separate components for each kind of media and then combining the parts. According to Google, that older approach did not work well for complex reasoning tasks. Because Gemini is pre-trained to be multimodal from the start, Google says it is capable of complex reasoning that exceeds previous models.

Another example of Gemini’s advanced features is the unprecedented size of its context window. The context window is the amount of data that a language model can consider at one time when producing an answer, and it is one measure of how powerful a language model is. Context windows are measured in “tokens,” the small units of text that a model processes.
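To make the idea concrete, here is a minimal Python sketch of how a fixed context window limits what a model can see. It assumes a toy whitespace tokenizer (real models use subword tokenizers, so real token counts differ), and the names `toy_tokenize` and `fit_to_window` are hypothetical, chosen only for illustration.

```python
def toy_tokenize(text: str) -> list[str]:
    """Split on whitespace. A stand-in for a real subword tokenizer (assumption)."""
    return text.split()


def fit_to_window(tokens: list[str], window_size: int) -> list[str]:
    """Keep only the most recent `window_size` tokens; older ones fall out of view."""
    if window_size <= 0:
        return []
    return tokens[-window_size:]


if __name__ == "__main__":
    tokens = toy_tokenize("the quick brown fox jumps over the lazy dog")
    visible = fit_to_window(tokens, window_size=4)
    print(visible)  # ['over', 'the', 'lazy', 'dog']
```

A larger window simply means fewer tokens are dropped, which is why window size matters for long documents and long videos.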

Context window comparison

- ChatGPT has a maximum context window of 32k tokens

- GPT-4 Turbo has a context window of 128k tokens

- Gemini 1.5 Pro has a context window of 1 million tokens

To put these numbers in perspective, Gemini’s context window can take in all three books of The Lord of the Rings or 10 hours of video and answer questions about them. By comparison, OpenAI’s largest context window of 128k tokens can hold the 198-page book Robinson Crusoe, or about 1,600 tweets.

According to Google, its internal research has achieved a context window of as much as 10 million tokens.
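The comparison can be checked with some quick arithmetic. The sketch below uses only the window sizes quoted in this article and treats "k" as 1,000 (an assumption); the names `CONTEXT_WINDOWS`, `window_ratio`, and `tokens_per_item` are hypothetical, and the figures are the article's rather than official specifications.

```python
# Context window sizes in tokens, as quoted in this article (k taken as 1,000).
CONTEXT_WINDOWS = {
    "ChatGPT": 32_000,
    "GPT-4 Turbo": 128_000,
    "Gemini 1.5 Pro": 1_000_000,
}


def window_ratio(larger: str, smaller: str) -> float:
    """How many times bigger one model's window is than another's."""
    return CONTEXT_WINDOWS[larger] / CONTEXT_WINDOWS[smaller]


def tokens_per_item(window_tokens: int, item_count: int) -> float:
    """Average token budget per item if `item_count` items fill the window."""
    return window_tokens / item_count


if __name__ == "__main__":
    print(window_ratio("Gemini 1.5 Pro", "GPT-4 Turbo"))  # 7.8125
    print(window_ratio("Gemini 1.5 Pro", "ChatGPT"))      # 31.25
    # "About 1600 tweets" in 128k tokens implies roughly 80 tokens per tweet.
    print(tokens_per_item(128_000, 1600))                 # 80.0
```

On these figures, the 1 million token window is roughly eight times the 128k window and about 31 times the 32k window.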

Leaked functionality is similar to iOS implementation

What was discovered is that Android now includes a way to access Gemini AI directly from the Google app’s search bar, in the same way that it is available on Apple mobile devices.

The official documentation for Apple devices mirrors what the researchers found hidden in Android.

iOS Gemini access is described as follows:

“On your iPhone, you can chat with Gemini in the Google app. Just tap the Gemini tab to unlock a whole new way to learn, create images, and get help on the go. Interact through text, voice, images, and camera to get support in new ways.”

The researchers who leaked the Gemini-in-search feature found that it is hidden within Android. Once the feature is enabled, a toggle appears in the Google search bar that lets users swipe to access Gemini AI directly, just like on iOS.

Enabling the feature requires rooting the Android smartphone, which means gaining access to the operating system at its most basic level of files.

According to the person who leaked the information, one of the requirements for the toggle is that Gemini must already be enabled as a mobile assistant. To get the ability to turn Google app features on and off, you’ll also need to install an app called GMS Flags.

The requirements are:

“What you need is:

- Rooted device running Android 12 or later

- Latest beta version of the Google app from the Play Store or Apkmirror

- GMS Flags app installed with root access granted (GitHub)

- Gemini should already be available in your Google app”
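The requirements quoted above can be expressed as a small pre-flight checklist. This is a sketch only: `DeviceState` and `missing_requirements` are hypothetical names, and the values have to be filled in by hand. Nothing here talks to a real device, to the Google app, or to GMS Flags.

```python
from dataclasses import dataclass

MIN_ANDROID_VERSION = 12  # "Android 12 or later", per the quoted requirements


@dataclass
class DeviceState:
    """Hypothetical snapshot of a device, filled in manually for this sketch."""
    android_version: int
    is_rooted: bool
    has_latest_google_app_beta: bool
    gms_flags_has_root_access: bool
    gemini_available_in_google_app: bool


def missing_requirements(state: DeviceState) -> list[str]:
    """Return the unmet requirements in checklist order (empty if all are met)."""
    checks = [
        (state.is_rooted, "device must be rooted"),
        (state.android_version >= MIN_ANDROID_VERSION,
         "Android 12 or later is required"),
        (state.has_latest_google_app_beta,
         "latest Google app beta must be installed"),
        (state.gms_flags_has_root_access,
         "GMS Flags must be installed with root access"),
        (state.gemini_available_in_google_app,
         "Gemini must already be available in the Google app"),
    ]
    return [message for ok, message in checks if not ok]


if __name__ == "__main__":
    phone = DeviceState(11, True, True, False, True)
    for problem in missing_requirements(phone):
        print("Missing:", problem)
```

An empty result means every item on the list is satisfied; it does not guarantee that the hidden toggle will appear.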

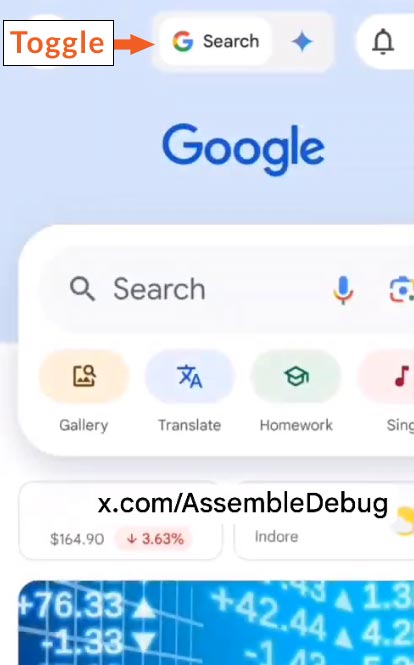

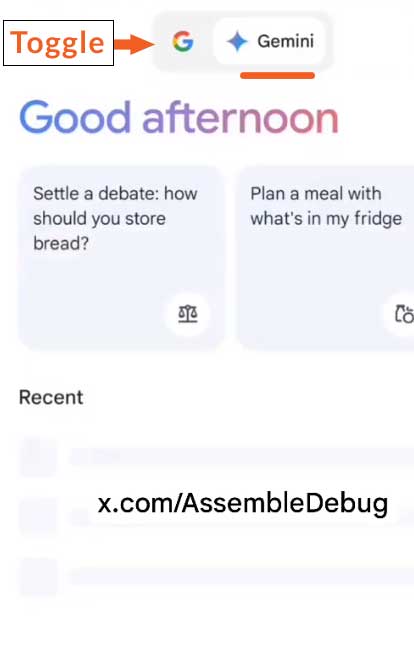

Screenshot of the new search toggle: the “Toggle” button in the user interface is highlighted with a red arrow pointing to it, with a Google search bar in the background and snippets of financial apps at the bottom.

Screenshot of Gemini activated by Google Search

The person who discovered the feature tweeted:

“Google apps for Android will soon be able to switch between Gemini and search [just like on iOS]”

📝Read – https://t.co/eMgD2NxZKX #Google #Android pic.twitter.com/i19Msjb8wm

— Assemble Debug (@AssembleDebug) April 7, 2024

Will Google announce the official rollout of SGE?

Google is rumored to be planning to announce the official rollout of the Google Search Generative Experience (SGE) at its I/O conference in May 2024, where the company regularly announces new search features, among other announcements.

Eli Schwartz recently posted on LinkedIn about the rumored SGE rollout:

“That date was not provided by Google PR. However, as of last week, this is the internally scheduled launch date. Of course, the schedule is subject to change, considering there are still 53 days to go. There have been multiple release dates missed throughout the last year.

…It’s also important to spell out what exactly we mean by “launch.”

Currently, unless you are participating in a beta experiment, the only way to see SGE is if you opt in to the lab.

Launching means showing SGE to people who haven’t opted in, but the scale can vary widely.”

It’s unclear whether this hidden toggle is a placeholder for a future version of the Google Search app, or whether it is something that will enable the rollout of SGE at a future date.

However, the hidden toggle offers a possible clue for those curious about how Google may roll out an AI-based front end to search, and about whether the toggle is in some way connected to that feature.

Read how to enable Gemini in Android search on a rooted device: “How to enable the Material bottom navigation search bar and Gemini toggle in Google Discover on Android [ROOT]”

List of OpenAI context windows

Featured image by Shutterstock/Mojahid Mottakin
